On August 18th, foreign media reported a disturbing trend: AI-generated images of Elon Musk are appearing in numerous fake advertisements. These deepfakes are expected to cause significant financial losses through fraudulent schemes.

A Tragic Tale of Trust and Betrayal

Consider the story of Steve Beauchamp, an 82-year-old retiree whose life savings were wiped out by a sophisticated scam. Last year, Beauchamp encountered a video that appeared to feature Elon Musk endorsing an enticing investment opportunity promising rapid returns. Intrigued by the prospect, he contacted the company promoting the project, opened an account, and initially deposited $248. Over several weeks, through a series of transactions, Beauchamp depleted his retirement fund, ultimately investing over $690,000.

The money was stolen by cybercriminals using artificial intelligence tools. The fraudsters took a legitimate interview with Musk and used AI to alter his voice, then adjusted his mouth movements to match the fabricated lines, making the manipulation hard for the average viewer to detect.

The Realism of Deception

Industry experts emphasize how realistic these videos are, noting that they effectively mimic Musk's distinctive tone and South African accent. Recently, numerous AI-generated videos, known as "deepfakes," have proliferated online, including fraudulent Musk images that have deceived countless potential investors. Deloitte forecasts that AI-driven deepfakes could result in billions of dollars in fraud losses annually.

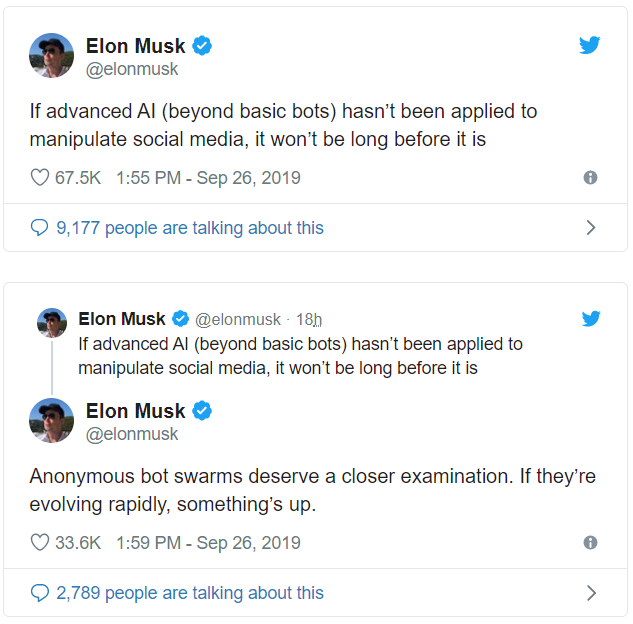

These videos can be produced cheaply within minutes and spread through social media platforms, including paid ads on Facebook, which significantly extends their reach. As AI continues to advance rapidly, the AI industry and regulatory authorities will need to work together to prevent this kind of misuse.

Q&A: Understanding the Risks of Deepfakes

Q: What are deepfakes?

A: Deepfakes are hyper-realistic videos or audio recordings created using artificial intelligence to replace a person's likeness or voice with someone else's. They can be used for entertainment, but they pose significant risks when used for malicious purposes such as fraud.

Q: How do deepfakes work?

A: Deepfakes use machine learning algorithms, particularly those based on generative adversarial networks (GANs), to create realistic simulations. These algorithms analyze thousands of images or hours of video footage to learn and replicate a person's facial expressions, voice, and other characteristics.

Q: Who is at risk from deepfake scams?

A: Anyone can be a target, but older adults and individuals unfamiliar with technology may be more susceptible. High-profile figures like Elon Musk are often used due to their recognizable faces and trusted public personas.

Q: How can people protect themselves from deepfake scams?

A: Stay vigilant and skeptical about unsolicited investment opportunities, especially those promoted via social media. Verify the authenticity of any communication claiming to be from a well-known individual or organization before taking any action. Use trusted sources for investment advice and avoid clicking on suspicious links or downloading unknown attachments.

As the technology behind deepfakes becomes more accessible, it is crucial for both consumers and creators to remain aware of the potential dangers and take proactive measures to guard against them.

Current page link: https://lawala.cn/post/14769.html
